OBJECTIVES:

_ To understand the notion of software engineering and why it is important

_ To appreciate the technical (engineering), managerial, and psychological aspects of software engineering

_ To understand the similarities and differences between software engineering and other engineering disciplines

_ To know the major phases in a software development project

_ To appreciate ethical dimensions in software engineering

_ To be aware of the time frame and extent to which new developments impact software engineering practice

Software engineering concerns methods and techniques to develop large software systems. The engineering metaphor is used to emphasize a systematic approach to develop systems that satisfy organizational requirements and constraints. This section gives a brief overview of the field and points at emerging trends that influence the way software is developed.

Computer science is still a young field. The first computers were built in the mid-1940s, and the field has developed tremendously since then.

Applications from the early years of computerization can be characterized as follows: the programs were quite small, certainly when compared to those that are currently being constructed; they were written by one person; they were written and used by experts in the application area concerned. The problems to be solved were mostly of a technical nature, and the emphasis was on expressing known algorithms efficiently in some programming language. Input typically consisted of numerical data, read from such media as punched tape or punched cards. The output, also numeric, was printed on paper. Programs were run off-line. If the program contained errors, the programmer studied an octal or hexadecimal dump of memory. Sometimes, the execution of the program would be followed by reading machine registers in binary at the console.

Independent software development companies hardly existed in those days. Software was mostly developed by hardware vendors and given away for free. These vendors sometimes set up user groups to discuss requirements, and then incorporated them into their software. This software development support was seen as a service to their customers.

Present-day applications are rather different in many respects. Present-day programs are often very large and are being developed by teams that collaborate over periods spanning several years. These teams may be scattered across the globe. The programmers are not the future users of the system they develop and they have no expert knowledge of the application area in question. The problems that are being tackled increasingly concern everyday life: automatic bank tellers, airline reservation, salary administration, electronic commerce, automotive systems, etc. Putting a man on the moon was not conceivable without computers.

In the 1960s, people started to realize that programming techniques had lagged behind the developments in software both in size and complexity. To many people, programming was still an art and had never become a craft. An additional problem was that many programmers had not been formally educated in the field. They had learned by doing. On the organizational side, attempted solutions to problems often involved adding more and more programmers to the project, the so-called 'million-monkey' approach.

As a result, software was often delivered too late, programs did not behave as the user expected, programs were rarely adaptable to changed circumstances, and many errors were detected only after the software had been delivered to the customer. This became known as the 'software crisis'.

This type of problem really became manifest in the 1960s. Under the auspices of NATO, two conferences were devoted to the topic in 1968 and 1969 (Naur and Randell, 1968), (Buxton and Randell, 1969). Here, the term 'software engineering' was coined in a somewhat provocative sense. Shouldn't it be possible to build software in the way one builds bridges and houses, starting from a theoretical basis and using sound and proven design and construction techniques, as in other engineering fields?

Software serves some organizational purpose. The reasons for embarking on a software development project vary. Sometimes, a solution to a problem is not feasible without the aid of computers, such as weather forecasting, or automated bank telling. Sometimes, software can be used as a vehicle for new technologies, such as typesetting, the production of chips, or manned space trips. In yet other cases software may increase user service (library automation, e-commerce) or simply save money (automated stock control).

In many cases, the expected economic gain will be a major driving force. It may not, however, always be easy to prove that automation saves money (just think of office automation) because apart from direct cost savings, the economic gain may also manifest itself in such things as a more flexible production or a faster or better user service. In either case, it is a value-creating activity.

Boehm (1981) estimated the total expenditure on software in the US to be $40 billion in 1980. This is approximately 2% of the GNP. In 1985, the total expenditure had risen to $70 billion in the US and $140 billion worldwide. Boehm and Sullivan (1999) estimated the annual expenditure on software development in 1998 to be $300-400 billion in the US, and twice that amount worldwide.

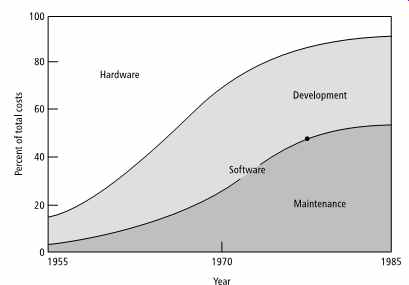

So the cost of software is of crucial importance. This concerns not only the cost of developing the software, but also the cost of keeping the software operational once it has been delivered to the customer. In the course of time, hardware costs have decreased dramatically. Hardware costs now typically comprise less than 20% of total expenditure (FIG. 1). The remaining 80% comprise all non-hardware costs: the cost of programmers, analysts, management, user training, secretarial help, etc.

An aspect closely linked with cost is productivity. In the 1980s, the demand for data processing personnel increased by 12% per year, while the population of people working in data processing and the productivity of those people each grew by approximately 4% per year (Boehm, 1987a). This situation has not fundamentally changed (Jones, 1999). The net effect is a growing gap between demand and supply.

The result is both a backlog with respect to the maintenance of existing software and a slowing down in the development of new applications. The combined effect may have repercussions on the competitive edge of an organization, especially so when there are severe time-to-market constraints. These developments have led to a shift from producing software to using software. We'll come back to this topic in section 6 and section ??.

The issues of cost and productivity of software development deserve our serious attention. However, this is not the complete story. Society is increasingly dependent on software. The quality of the systems we develop increasingly determines the quality of our existence. Consider as an example the following message from a Dutch newspaper on June 6, 1980, under the heading 'Americans saw the Russians coming':

For a short period last Tuesday the United States brought their atomic bombers and nuclear missiles to an increased state of alarm when, because of a computer error, a false alarm indicated that the Soviet Union had started a missile attack.

Efforts to repair the error were apparently in vain, for on June 9, 1980, the same newspaper reported:

For the second time within a few days, a deranged computer reported that the Soviet Union had started a nuclear attack against the United States. Last Saturday, the DoD affirmed the false message, which resulted in the engines of the planes of the strategic air force being started.

It is not always the world that is in danger. On a smaller scale, errors in software may have very unfortunate consequences, such as transaction errors in bank traffic; reminders to finally pay that bill of $0.00; a stock control system that issues orders too late and thus lays off complete divisions of a factory.

FIG. 1 Relative distribution of hardware/software costs. (Source: B.W. Boehm, Software Engineering, IEEE Transactions on Computers, 1976 IEEE.)

The latter example indicates that errors in a software system may have serious financial consequences for the organization using it. One example of such a financial loss is the large US airline company that lost $50M because of an error in their seat reservation system. The system erroneously reported that cheap seats were sold out, while in fact there were plenty available. The problem was detected only after quarterly results lagged considerably behind those of both their own previous periods and those of their competitors.

Errors in automated systems may even have fatal effects. One computer science weekly magazine contained the following message in April 1983:

The court in Düsseldorf has discharged a woman, who was on trial for murdering her daughter. An erroneous message from a computerized system made the insurance company inform her that she was seriously ill. She was said to suffer from an incurable form of syphilis. Moreover, she was said to have infected both her children. In panic, she strangled her 15 year old daughter and tried to kill her 13 year old son and herself.

The boy escaped, and with some help he enlisted prevented the woman from dying of an overdose. The judge blamed the computer error and considered the woman not responsible for her actions.

This all marks the enormous importance of the field of software engineering. Better methods and techniques for software development may result in large financial savings, in more effective methods of software development, in systems that better fit user needs, in more reliable software systems, and thus in a more reliable environment in which those systems function. Quality and productivity are two central themes in the field of software engineering.

On the positive side, it is imperative to point to the enormous progress that has been made since the 1960s. Software is ubiquitous and scores of trustworthy systems have been built. These range from small spreadsheet applications to typesetting systems, banking systems, Web browsers and the Space Shuttle software. The techniques and methods discussed in this guide have contributed their mite to the success of these and many other software development projects.

1.1. What is Software Engineering?

In various texts on this topic, one encounters a definition of the term software engineering. An early definition was given at the first NATO conference (Naur and Randell, 1968):

Software engineering is the establishment and use of sound engineering principles in order to obtain economically software that is reliable and works efficiently on real machines.

The definition given in the IEEE Standard Glossary of Software Engineering Terminology (IEEE610, 1990) is as follows:

Software engineering is the application of a systematic, disciplined, quantifiable approach to the development, operation, and maintenance of software; that is, the application of engineering to software.

These and other definitions of the term software engineering use rather different words. However, the essential characteristics of the field are always, explicitly or implicitly, present:

_ Software engineering concerns the development of large programs.

DeRemer and Kron (1976) make a distinction between programming-in-the-large and programming-in-the-small. The borderline between large and small obviously is not sharp: a program of 100 lines is small, a program of 50 000 lines of code certainly is not. Programming-in-the-small generally refers to programs written by one person in a relatively short period of time. Programming-in-the-large, then, refers to multi-person jobs that span, say, more than half a year.

For example:

- The NASA Space Shuttle software contains 40M lines of object code (this is 30 times as much as the software for the Saturn V project from the 1960s) (Boehm, 1981);

- The IBM OS/360 operating system took 5000 man-years of development effort (Brooks, 1995).

Traditional programming techniques and tools are primarily aimed at supporting programming-in-the-small. This not only holds for programming languages, but also for the tools (like flowcharts) and methods (like structured programming). These cannot be directly transferred to the development of large programs.

In fact, the term program -- in the sense of a self-contained piece of software that can be invoked by a user or some other system component -- is not adequate here. Present-day software development projects result in systems containing a large number of (interrelated) programs -- or components.

_ The central theme is mastering complexity.

In general, the problems are such that they cannot be surveyed in their entirety. One is forced to split the problem into parts such that each individual part can be grasped, while the communication between the parts remains simple. The total complexity does not decrease in this way, but it does become manageable.

In a stereo system there are components such as an amplifier, a receiver, and a tuner, and communication via a thin wire. In software, we strive for a similar separation of concerns. In a program for library automation, components such as user interaction, search processes and data storage could for instance be distinguished, with clearly given facilities for data exchange between those components. Note that the complexity of many a piece of software is not so much caused by the intrinsic complexity of the problem (as in the case of compiler optimization algorithms or numerical algorithms to solve partial differential equations), but rather by the vast number of details that must be dealt with.
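The library example above can be sketched in code. The following is a minimal Python sketch, not taken from any real library system; the names `Book`, `Catalog`, `InMemoryCatalog`, `SearchService`, and `render_results` are hypothetical. Each component exposes a narrow interface, so that the communication between the parts stays simple.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Book:
    title: str
    author: str


class Catalog(Protocol):
    """Data storage component: the only way other parts reach the data."""

    def all_books(self) -> list[Book]: ...


class InMemoryCatalog:
    """One possible storage implementation (hypothetical, for illustration)."""

    def __init__(self, books: list[Book]) -> None:
        self._books = list(books)

    def all_books(self) -> list[Book]:
        return list(self._books)


class SearchService:
    """Search component: depends on the Catalog interface, not on storage details."""

    def __init__(self, catalog: Catalog) -> None:
        self._catalog = catalog

    def by_author(self, author: str) -> list[Book]:
        wanted = author.casefold()
        return [b for b in self._catalog.all_books() if b.author.casefold() == wanted]


def render_results(books: list[Book]) -> str:
    """User interaction component: turns search results into text for display."""
    if not books:
        return "No books found."
    return "\n".join(f"{b.title} ({b.author})" for b in books)


catalog = InMemoryCatalog([
    Book("Software Engineering Economics", "Boehm"),
    Book("The Mythical Man-Month", "Brooks"),
])
search = SearchService(catalog)
print(render_results(search.by_author("brooks")))
```

Replacing `InMemoryCatalog` with, say, a database-backed class would not require changes to the search or display code, as long as the new class offers the same `all_books` operation.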

_ Software evolves.

Most software models a part of reality, such as processing requests in a library or tracking money transfers in a bank. This reality evolves. If software is not to become obsolete fairly quickly, it has to evolve with the reality that is being modeled. This means that costs are incurred after delivery of the software system and that we have to bear this evolution in mind during development.

_ The efficiency with which software is developed is of crucial importance.

The total cost and development time of software projects are high. This also holds for the maintenance of software. The demand for new applications exceeds the available workforce. The gap between supply and demand is growing. Time-to-market demands ask for quick delivery. Important themes within the field of software engineering concern better and more efficient methods and tools for the development and maintenance of software, especially methods and tools enabling the use and reuse of components.

_ Regular cooperation between people is an integral part of programming-in-the-large.

Since the problems are large, many people have to work concurrently at solving those problems. Increasingly often, teams at different geographic locations work together in software development. There must be clear arrangements for the distribution of work, methods of communication, responsibilities, and so on. Arrangements alone are not sufficient, though; one also has to stick to those arrangements. In order to enforce them, standards or procedures may be employed. Those procedures and standards can often be supported by tools. Discipline is one of the keys to the successful completion of a software development project.

_ The software has to support its users effectively.

Software is developed in order to support users at work. The functionality offered should fit users' tasks. Users that are not satisfied with the system will try to circumvent it or, at best, voice new requirements immediately. It is not sufficient to build the system in the right way; we also have to build the right system. Effective user support means that we must carefully study users at work, in order to determine the proper functional requirements, and we must address usability and other quality aspects as well, such as reliability, responsiveness, and user-friendliness. It also means that software development entails more than delivering software. User manuals and training material may have to be written, and attention must be given to developing the environment in which the new system is going to be installed. For example, a new automated library system will affect working procedures within the library.

_ Software engineering is a field in which members of one culture create artifacts on behalf of members of another culture.

This aspect is closely linked to the previous two items. Software engineers are experts in one or more areas such as programming in Java, software architecture, testing, or the Unified Modeling Language. They are generally not experts in library management, avionics, or banking. Yet they have to develop systems for such domains. The thin spread of application domain knowledge is a common source of problems in software development projects.

Not only do software engineers lack factual knowledge of the domain for which they develop software, they lack knowledge of its culture as well. For example, a software developer may discover the 'official' set of work practices of a certain user community from interviews, written policies, and the like; these work practices are then built into the software. A crucial question with respect to system acceptance and success, however, is whether that community actually follows those work practices. For an outside observer, this question is much more difficult to answer.

_ Software engineering is a balancing act.

In most realistic cases, it is illusory to assume that the collection of requirements voiced at the start of the project is the only factor that counts. In fact, the term requirement is a misnomer. It suggests something immutable, while in fact most requirements are negotiable. There are numerous business, technical and political constraints that may influence a software development project.

For example, one may decide to use database technology X rather than Y, simply because of available expertise with that technology. In extreme cases, characteristics of available components may determine functionality offered, rather than the other way around.

The above list shows that software engineering has many facets. Software engineering certainly is not the same as programming, although programming is an important ingredient of software engineering. Mathematical aspects play a role since we are concerned with the correctness of software. Sound engineering practices are needed to get useful products. Psychological and sociological aspects play a role in the communication between human and machine, organization and machine, and between humans. Finally, the development process needs to be controlled, which is a management issue.

The term 'software engineering' hints at possible resemblances between the construction of programs and the construction of houses or bridges. These kinds of resemblances do exist. In both cases we work from a set of desired functions, using scientific and engineering techniques in a creative way. Techniques that have been applied successfully in the construction of physical artifacts are also helpful when applied to the construction of software systems: development of the product in a number of phases, a careful planning of these phases, continuous audit of the whole process, construction from a clear and complete design, etc.

Even in a mature engineering discipline, say bridge design, accidents do happen. Bridges collapse once in a while. Most problems in bridge design occur when designers extrapolate beyond their models and expertise. A famous example is the Tacoma Narrows Bridge failure in 1940. The designers of that bridge extrapolated beyond their experience to create more flexible stiffening girders for suspension bridges. They did not think about aerodynamics and the response of the bridge to wind. As a result, that bridge collapsed shortly after it was finished. This type of extrapolation seems to be the rule rather than the exception in software development. We regularly embark on software development projects that go far beyond our expertise.

There are additional reasons for considering the construction of software as something quite different from the construction of physical products. The cost of constructing software is incurred during development and not during production. Copying software is almost free. Software is logical in nature rather than physical.

Physical products wear out in time and therefore have to be maintained. Software does not wear out. The need to maintain software is caused by errors detected late or by changing requirements of the user. Software reliability is determined by the manifestation of errors already present, not by physical factors such as wear and tear. We may even argue that software wears out because it is being maintained.

Viewing software engineering as a branch of engineering is problematic for another reason as well. The engineering metaphor hints at disciplined work, proper planning, good management, and the like. It suggests we deal with clearly defined needs that can be fulfilled if we follow all the right steps. Many software development projects, though, involve the translation of some real-world phenomenon into digital form. The knowledge embedded in this real-world phenomenon is tacit, undefined, uncodified, and may have developed over a long period of time. The assumption that we are dealing with a well-defined problem simply does not hold. Rather, the design process is open-ended, and the solution emerges as we go along. This dichotomy is reflected in views of the field put in the forefront over time. In the early days, the field was seen as a craft. As a countermovement, the term software engineering was coined, and many factory concepts were introduced. In the late 1990s, the pendulum swung back again and the craft aspect was emphasized anew in the agile movement (see section 3). Both engineering-like and craft-like aspects have their place, and we will give a balanced treatment of both.

Two characteristics that make software development projects extra difficult to manage are visibility and continuity. It is much more difficult to see progress in software construction than it is to notice progress in building a bridge. One often hears the phrase that a program 'is almost finished'. One equally often underestimates the time needed to finish up the last bits and pieces. This '90% complete' syndrome is very pervasive in software development. Not knowing how to measure real progress, we often use a surrogate measure, the rate of expenditure of resources. For example, a project that has a budget of 100 person-days is perceived as being 50% complete after 50 person-days are expended. Strictly speaking, we then confuse speed with progress. Because of the imprecise measurement of progress and the customary underestimation of total effort, problems accumulate as time elapses.
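The difference between the surrogate measure and real progress can be made concrete with a little arithmetic. The following is a minimal sketch, assuming (hypothetically) that real progress is measured as the share of estimated work contained in tasks that are completely finished. The function names and the task data are illustrative only.

```python
def progress_by_expenditure(spent_days: float, budget_days: float) -> float:
    """Surrogate measure: fraction of the budget that has been spent."""
    return spent_days / budget_days


def progress_by_completed_work(tasks: list[tuple[float, bool]]) -> float:
    """Fraction of the estimated work that sits in finished tasks.

    Each task is (estimated_days, is_finished). Unfinished tasks count as
    zero, which avoids giving credit for work that is 'almost finished'.
    """
    total = sum(estimate for estimate, _ in tasks)
    done = sum(estimate for estimate, finished in tasks if finished)
    return done / total


# Hypothetical project: 100 person-days budgeted, 50 spent so far.
tasks = [(20, True), (10, True), (30, False), (40, False)]
print(progress_by_expenditure(50, 100))   # 0.5
print(progress_by_completed_work(tasks))  # 0.3
```

In this made-up project, half the budget is gone while only 30% of the estimated work is actually finished; the two numbers answer different questions.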

Physical systems are often continuous in the sense that small changes in the specification lead to small changes in the product. This is not true with software. Small changes in the specification of software may lead to considerable changes in the software itself. In a similar way, small errors in software may have considerable effects. The Mariner space rocket to Venus, for example, got lost because of a typing error in a FORTRAN program. In 1999, the Mars Climate Orbiter got lost because one development team used English units such as inches and feet, while another team used metric units.
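A unit mix-up of this kind is easy to overlook because plain numbers carry no unit. The sketch below is illustrative only and is not the actual mission software; the class `Impulse` and its methods are hypothetical, and impulse is chosen as the example quantity by assumption. It tags each value with its unit at construction time, using the standard factor 1 lbf = 4.4482216152605 N.

```python
from dataclasses import dataclass

LBF_S_TO_N_S = 4.4482216152605  # 1 pound-force second in newton-seconds


@dataclass(frozen=True)
class Impulse:
    """An impulse value stored in newton-seconds (hypothetical helper type)."""

    newton_seconds: float

    @classmethod
    def from_newton_seconds(cls, value: float) -> "Impulse":
        return cls(value)

    @classmethod
    def from_pound_force_seconds(cls, value: float) -> "Impulse":
        return cls(value * LBF_S_TO_N_S)

    def __add__(self, other: "Impulse") -> "Impulse":
        if not isinstance(other, Impulse):
            return NotImplemented  # refuse to add bare numbers of unknown unit
        return Impulse(self.newton_seconds + other.newton_seconds)


# With bare floats, 10 lbf*s and 10 N*s look identical and add up silently.
wrong_total = 10.0 + 10.0

# With tagged values, the unit must be stated where the value is created.
total = Impulse.from_pound_force_seconds(10.0) + Impulse.from_newton_seconds(10.0)
print(wrong_total, total.newton_seconds)
```

The design choice here is to make the unit part of the interface between components, so that a mismatch shows up as an explicit conversion or a type error rather than as a silently wrong number.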

We may likewise draw a comparison between software engineering and computer science. Computer science emerged as a separate discipline in the 1960s. It split from mathematics and has been heavily influenced by mathematics. Topics studied in computer science, such as algorithm complexity, formal languages, and the semantics of programming languages, have a strong mathematical flavor. PhD theses in computer science invariably contain theorems with accompanying proofs.

As the field of software engineering emerged from computer science, it had a similar inclination to focus on clean aspects of software development that can be formalized, in both teaching and research. We used to assume that requirements could be fully stated before the project started, concentrated on systems built from scratch, and ignored the reality of trading off quality aspects against the available budget. Not to mention the trenches of software maintenance.

Software engineering and computer science do have a considerable overlap. The practice of software engineering however also has to deal with such matters as the management of huge development projects, human factors (regarding both the development team and the prospective users of the system) and cost estimation and control. Software engineers must engineer software.

Software engineering has many things in common both with other fields of engineering and with computer science. It also has a face of its own in many ways.

2. Phases in the Development of Software

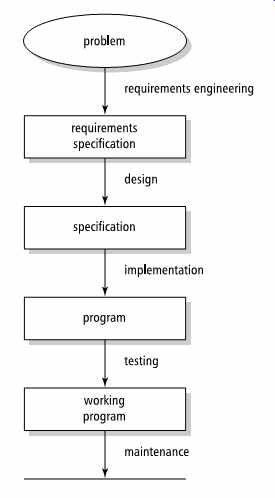

FIG. 2 A simple view of software development

When building a house, the builder does not start with piling up bricks. Rather, the requirements and possibilities of the client are analyzed first, taking into account such factors as family structure, hobbies, finances and the like. The architect takes these factors into consideration when designing a house. Only after the design has been agreed upon is the actual construction started.

It is expedient to act in the same way when constructing software. First, the problem to be solved is analyzed and the requirements are described in a very precise way. Then a design is made based on these requirements. Finally, the construction process, i.e. the actual programming of the solution, is started. There are a number of distinguishable phases in the development of software. The phases as discussed in this guide are depicted in FIG. 2.

The process model depicted in FIG. 2 is rather simple. In reality, things will usually be more complex. For instance, the design phase is often split into a global, architectural design phase and a detailed design phase, and often various test phases are distinguished. The basic elements, however, remain as given in FIG. 2. These phases have to be passed through in each project. Depending on the kind of project and the working environment, a more detailed scheme may be needed.

In FIG. 2, the phases have been depicted sequentially. For a given project these activities are not necessarily separated as strictly as indicated here. They may and usually will overlap. It is, for instance, quite possible to start implementation of one part of the system while some of the other parts have not been fully designed yet.

As we will see in section 3, there is no strict linear progression from requirements engineering to design, from design to implementation, etc. Backtracking to earlier phases occurs, because of errors discovered or changing requirements. One had better think of these phases as a series of workflows. Early on, most resources are spent on the requirements engineering workflow. Later on, effort moves to the implementation and testing workflows.

Below, a short description is given of each of the basic elements from FIG. 2.

Various alternative process models will be discussed in section 3. These alternative models result from justifiable criticism of the simple-minded model depicted in FIG. 2. The sole aim of our simple model is to provide an adequate structuring of topics to be addressed. The maintenance phase is further discussed in section 3.

All elements of our process model will be treated much more elaborately in later sections.

Requirements engineering. The goal of the requirements engineering phase is to get a complete description of the problem to be solved and the requirements posed by and on the environment in which the system is going to function. Requirements posed by the environment may include hardware and supporting software or the number of prospective users of the system to be developed. Alternatively, analysis of the requirements may lead to certain constraints imposed on hardware yet to be acquired or to the organization in which the system is to function. A description of the problem to be solved includes such things as:

- the functions of the software to be developed;

- possible future extensions to the system;

- the amount, and kind, of documentation required;

- response time and other performance requirements of the system.

Part of requirements engineering is a feasibility study. The purpose of the feasibility study is to assess whether there is a solution to the problem which is both economically and technically feasible.

The more careful we are during the requirements engineering phase, the larger is the chance that the ultimate system will meet expectations. To this end, the various people (among others, the customer, prospective users, designers, and programmers) involved have to collaborate intensively. These people often have widely different backgrounds, which does not ease communication.

The document in which the result of this activity is laid down is called the requirements specification.

Design. During the design phase, a model of the whole system is developed which, when encoded in some programming language, solves the problem for the user. To this end, the problem is decomposed into manageable pieces called components; the functions of these components and the interfaces between them are specified in a very precise way. The design phase is crucial. Requirements engineering and design are sometimes seen as an annoying introduction to programming, which is often seen as the real work. This attitude has a very negative influence on the quality of the resulting software.

Early design decisions have a major impact on the quality of the final system. These early design decisions may be captured in a global description of the system, i.e. its architecture. The architecture may next be evaluated, serve as a template for the development of a family of similar systems, or be used as a skeleton for the development of reusable components. As such, the architectural description of a system is an important milestone document in present-day software development projects.

During the design phase we try to separate the what from the how. We concentrate on the problem and should not let ourselves be distracted by implementation concerns.

The result of the design phase, the (technical) specification, serves as a starting point for the implementation phase. If the specification is formal in nature, it can also be used to derive correctness proofs.

Implementation. During the implementation phase, we concentrate on the individual components. Our starting point is the component's specification. It is often necessary to introduce an extra 'design' phase, the step from component specification to executable code often being too large. In such cases, we may take advantage of some high-level, programming-language-like notation, such as a pseudocode. (A pseudocode is a kind of programming language. Its syntax and semantics are in general less strict, so that algorithms can be formulated at a higher, more abstract, level.) It is important to note that the first goal of a programmer should be the development of a well-documented, reliable, easy-to-read, flexible, and correct program. The goal is not to produce a very efficient program full of tricks. We will come back to the many dimensions of software quality in section 6.
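As an illustration of the intermediate step from component specification to code, the sketch below first states an algorithm in pseudocode (as comments) and then refines it into Python. The component, a function that lists overdue loans in a library system, and all names in it are hypothetical. The code deliberately favors readability over cleverness.

```python
from dataclasses import dataclass
from datetime import date

# Pseudocode (higher-level, less strict):
#   for each loan in loans:
#       if the loan is not returned and its due date lies before today:
#           report the loan
#   order the reported loans, longest overdue first


@dataclass(frozen=True)
class Loan:
    title: str
    due: date
    returned: bool = False


def overdue_loans(loans: list[Loan], today: date) -> list[Loan]:
    """Return unreturned loans whose due date has passed, longest overdue first."""
    overdue = [loan for loan in loans if not loan.returned and loan.due < today]
    # The earliest due date is the longest overdue, so sort ascending by due date.
    return sorted(overdue, key=lambda loan: loan.due)


loans = [
    Loan("Book A", date(2024, 3, 1)),
    Loan("Book B", date(2024, 1, 15)),
    Loan("Book C", date(2024, 2, 1), returned=True),
    Loan("Book D", date(2024, 6, 1)),
]
for loan in overdue_loans(loans, today=date(2024, 4, 1)):
    print(loan.title, loan.due.isoformat())
```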

During the design phase, a global structure is imposed through the introduction of components and their interfaces. In the more classic programming languages, much of this structure tends to get lost in the transition from design to code. More recent programming languages offer possibilities to retain this structure in the final code through the concept of modules or classes.

The result of the implementation phase is an executable program.

Testing. Actually, it is wrong to say that testing is a phase following implementation. This suggests that you need not bother about testing until implementation is finished. This is not true. It is even fair to say that this is one of the biggest mistakes you can make.

Attention has to be paid to testing even during the requirements engineering phase. During the subsequent phases, testing is continued and refined. The earlier that errors are detected, the cheaper it is to correct them.

Testing at phase boundaries comes in two flavors. We have to test that the transition between subsequent phases is correct (this is known as verification). We also have to check that we are still on the right track as regards fulfilling user requirements (validation). Adding verification and validation activities to the linear model of FIG. 2 yields the so-called waterfall model of software development (see also section 3).

Maintenance. After delivery of the software, there are often errors that have still gone undetected. Obviously, these errors must be repaired. In addition, the actual use of the system can lead to requests for changes and enhancements. All these types of changes are denoted by the rather unfortunate term maintenance. Maintenance thus concerns all activities needed to keep the system operational after it has been delivered to the user.

An activity spanning all phases is project management. Like other projects, software development projects must be managed properly in order to ensure that the product is delivered on time and within budget. The visibility and continuity characteristics of software development, as well as the fact that many software development projects are undertaken with insufficient prior experience, seriously impede project control. The many examples of software development projects that fail to meet their schedule provide ample evidence of the fact that we have by no means satisfactorily dealt with this issue yet. Sections 2--8 deal with major aspects of software project management, such as project planning, team organization, quality issues, cost and schedule estimation.

An important activity not identified separately is documentation. A number of key ingredients of the documentation of a software project will be elaborated upon in the sections to follow. Key components of system documentation include the project plan, quality plan, requirements specification, architecture description, design documentation and test plan. For larger projects, a considerable amount of effort will have to be spent on properly documenting the project. The documentation effort must start early on in the project. In practice, documentation is often seen as a balancing item. Since many projects are pressed for time, the documentation tends to get the worst of it. Software maintainers and developers know this, and adapt their way of working accordingly. As a rule of thumb, Lethbridge et al. (2003) state that the closer one gets to the code, the more accurate the documentation must be for software engineers to use it. Outdated requirements documents and other high-level documentation may still give valuable clues. They are useful to people who have to learn about a new system or have to develop test cases, for instance. Outdated low-level documentation is worthless, and drives programmers to consult the code rather than its documentation. Since the system will undergo changes after delivery, because of errors that went undetected or changing user requirements, proper and up-to-date documentation is of crucial importance during maintenance.

A particularly noteworthy element of documentation is the user documentation. Software development should be task-oriented in the sense that the software to be delivered should support users in their task environment. Likewise, the user documentation should be task-oriented. User manuals should not just describe the features of a system; they should help people to get things done. We cannot simply rely on the structure of the interface to organize the user documentation (just as a programming language reference manual is not an appropriate source for learning how to program).

FIG. 3 depicts the relative effort spent on the various activities up to delivery of the system. From this data a very clear trend emerges, the so-called 40--20--40 rule: only 20% of the effort is spent on actually programming (coding) the system, while the preceding phases (requirements engineering and design) and testing each consume about 40% of the total effort.

FIG. 3 Relative effort for the various activities

Depending on specific boundary conditions, properties of the system to be constructed, and the like, variations to this rule can be found. For iterative development projects, the distinction between requirements engineering, design, implementation and (unit) testing gets blurred, for instance. For the majority of projects, however, this rule of thumb is quite workable.

This does not imply that the 40--20--40 rule is the one to strive for. Errors made during requirements engineering are the ones that are most costly to repair (see also the section on testing). It is far better to put more energy into the requirements engineering phase than to try to remove errors during the time-consuming testing phase or, worse still, during maintenance. According to Boehm (1987b), successful projects follow a 60--15--25 distribution: 60% requirements engineering and design, 15% implementation and 25% testing. The message is clear: the longer you postpone coding, the earlier you are finished.
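To see what the two distributions mean in absolute terms, the following sketch splits a total effort over the three activities. The percentages are the ones quoted in the text; the total of 200 person-months and the names `split_effort`, `RULE_40_20_40`, and `BOEHM_60_15_25` are assumptions made purely for illustration.

```python
# Phase shares in percent, as quoted in the text.
RULE_40_20_40 = {"requirements and design": 40, "implementation": 20, "testing": 40}
BOEHM_60_15_25 = {"requirements and design": 60, "implementation": 15, "testing": 25}


def split_effort(total_effort, shares):
    """Distribute a total effort over phases according to percentage shares."""
    if sum(shares.values()) != 100:
        raise ValueError("shares must add up to 100")
    return {phase: total_effort * pct / 100 for phase, pct in shares.items()}


if __name__ == "__main__":
    total = 200  # person-months: an assumed figure, not data from the text
    for name, shares in (("40--20--40", RULE_40_20_40), ("60--15--25", BOEHM_60_15_25)):
        print(name, split_effort(total, shares))
```

For the assumed 200 person-months, the first rule gives 80, 40 and 80 person-months, while the second gives 120, 30 and 50: the testing effort drops by 30 person-months, and the requirements engineering and design effort grows by 40.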

FIG. 3 does not show the extent of the maintenance effort. When we consider the total cost of a software system over its lifetime, it turns out that, on average, maintenance alone consumes 50--75% of these costs; see also FIG. 1. Thus, maintenance alone consumes at least as much as the various development phases taken together.
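The relation between development cost and maintenance share can be worked out with a little arithmetic. If maintenance takes a fraction m of the lifetime cost, development takes the remaining 1 - m, so the lifetime cost is the development cost divided by 1 - m. The sketch below applies this to both ends of the 50--75% range; the development cost of 100 units and the function name `lifetime_costs` are hypothetical.

```python
def lifetime_costs(development_cost, maintenance_share):
    """Return (total lifetime cost, maintenance cost).

    maintenance_share is the fraction of the lifetime cost spent on
    maintenance, so development accounts for the remaining fraction.
    """
    if not 0 <= maintenance_share < 1:
        raise ValueError("maintenance_share must be in the range [0, 1)")
    total = development_cost / (1 - maintenance_share)
    return total, total - development_cost


if __name__ == "__main__":
    development = 100  # assumed cost units, not data from the text
    for share in (0.50, 0.75):
        total, maintenance = lifetime_costs(development, share)
        print(f"share {share:.0%}: total {total:.0f}, maintenance {maintenance:.0f}")
```

With a 50% share, maintenance costs as much as development (100 units each); with a 75% share, it costs three times as much (300 units against 100).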

3. Maintenance or Evolution

The only thing we maintain is user satisfaction.

Once software has been delivered, it usually still contains errors which, upon discovery, must be repaired. Note that this type of maintenance is not caused by wear and tear. Rather, it concerns repair of hidden defects. This type of repair is comparable to that encountered after a newly-built house is first occupied.

The story becomes quite different if we start talking about changes or enhancements to the system. Repainting our office or repairing a leak in the roof of our house is called maintenance. Adding a wing to our office is seldom called maintenance.

This is more than a trifling game with words. Over the total lifetime of a software system, more money is spent on maintaining that system than on initial development.

If all these expenses merely concerned the repair of errors made during one of the development phases, our business would be doing very badly indeed. Fortunately, this is not the case.

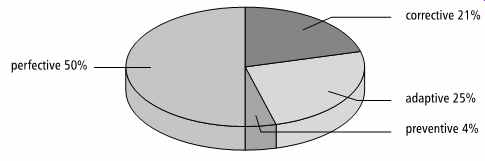

We distinguish four kinds of maintenance activities:

- corrective maintenance -- the repair of actual errors;

- adaptive maintenance -- adapting the software to changes in the environment, such as new hardware or the next release of an operating or database system;

- perfective maintenance -- adapting the software to new or changed user requirements, such as extra functions to be provided by the system. Perfective maintenance also includes work to increase the system's performance or to enhance its user interface;

- preventive maintenance -- increasing the system's future maintainability.

Updating documentation, adding comments, or improving the modular structure of a system are examples of preventive maintenance activities.

Only the first category may rightfully be termed maintenance. This category, however, accounts only for about a quarter of the total maintenance effort. Approximately another quarter of the maintenance effort concerns adapting software to environmental changes, while half of the maintenance cost is spent on changes to accommodate changing user requirements, i.e. enhancements to the system (see FIG. 4).

Changes in both the system's environment and user requirements are inevitable. Software models part of reality, and reality changes, whether we like it or not. So the software has to change too. It has to evolve. A large percentage of what we are used to calling maintenance is actually evolution. Maintenance because of new user requirements occurs in both high and low quality systems. A successful system calls for new, unforeseen functionality, because of its use by many satisfied users. A less successful system has to be adapted in order to satisfy its customers.

FIG. 4 Distribution of maintenance activities

The result is that the software development process becomes cyclic, hence the phrase software life cycle. Backtracking to previous phases, alluded to above, occurs not only during maintenance. During other phases, too, we will from time to time revisit earlier phases. During design, it may be discovered that the requirements specification is not complete or contains conflicting requirements. During testing, errors introduced in the implementation or design phase may crop up. In these and similar cases an iteration of earlier phases is needed. We will come back to this cyclic nature of the software development process in section 3, when we discuss alternative models of the software development process.
